Thinking about an economy of bit flips

Last updated: Dec 17, 2022

Disclaimer: un-researched, under-educated, fast and loose hacking follows.

The other day I was thinking about counting in binary and how bits cascade when incrementing a counter.

000

001

010

011

100

I wondered: what if each bit flip was very “expensive”, for the hardware say? Are there other methods we could use that woud result in a “cheaper” increment operation, by avoiding those costly carries?

First, here’s a little bit of boring code that we’ll use below to print a list of Ints as padded binary, and some requisite imports:

import Data.Bits

import Data.Word

import Numeric

import Text.Printf

import Data.Char

prettyPrintBits :: (Integral i, Show i)=> [i] -> IO ()

prettyPrintBits = mapM_ putStrLn . prettyListBits

prettyListBits :: (Integral i, Show i)=> [i] -> [String]

prettyListBits l = map (printPadded . toBin) l where

toBin i = showIntAtBase 2 intToDigit i ""

printPadded = printf ("%0"++width++"s")

width = show $ ceiling $ logBase 2 $ (+1) $ fromIntegral $ maximum l

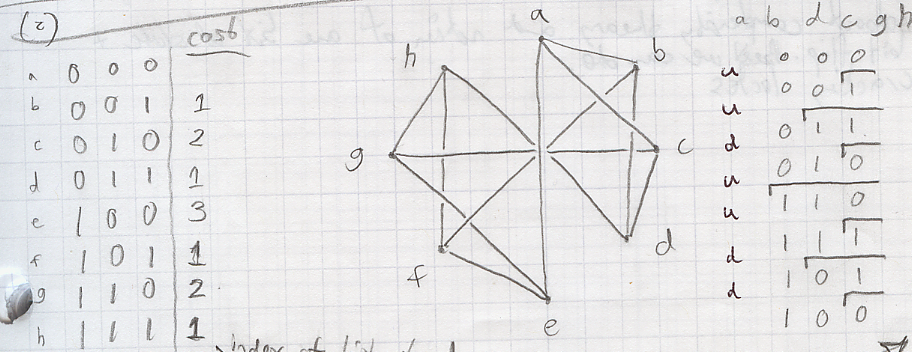

Anyway I started playing with this by hand, with a 3-bit example, and drawing funny pictures like these:

On the left is a table with the cost, in bit-flips, to increment a binary counter to that number, from the previous.

Then (in the middle of the pic above) I drew an undirected graph with edges connecting the numbers that differed by only a single bit (lots of symmetry there, no?); so what we’d like to do is walk that graph without repeating, to cycle through all the 3-bit combinations as “efficiently” as possible.

After doodling for a second I found a nice symmetrical path through the graph, and wrote the numbers we pass through in sequence (on the right side of the pic above).

Okay, but can we write a program to generate that sequence? And generalize it?

Generating Sequences

After I marked the bit-flip locations in the “bit-economical” binary sequence, I noticed the pattern from top to bottom is the same as what we’d use to build a binary tree from an ordered list, numbered from the bottom up.

Furthermore glancing back to my original table of “increment costs” I noticed they follow the same pattern; it turns out we can use a few simple bitwise operations on a normal binary count to enumerate all n-bit numbers with optimally-efficient bit flips. The algorithm is:

- start the sequence at 0

- for a count 0,1,2.. do

- find the difference (

XOR) of adjacent numbers - find the number of

1s in the result (POPCNT) - flip the bit at that offset to get to the next number in the sequence

- find the difference (

Here’s an implementation in haskell that represents an infinite (sort of) efficient bit count:

lazyBits :: [Word]

lazyBits = scanl complementBit 0 bitsToFlip where

ns = [0..] :: [Word]

bitsToFlip = zipWith (\x-> subtract 1 . popCount . xor x) ns $ tail ns

We can play with this & pretty-print the result:

*Main Text.Printf> take (2^3) $ lazyBits

[0,1,3,2,6,7,5,4]

*Main Text.Printf> prettyPrintBits $ take (2^3) $ lazyBits

000

001

011

010

110

111

101

100

Aside: Applications and variations

This is similar to de Bruijn sequences and I’m sure this sort of sequence has some interesting applications. For instance just as with de Bruijn sequences I could see this being useful in cracking an imaginary lock consisting of toggling push-button digits.

But most real locks like that have buttons that lock in place and have a single

“reset” button. To model those we could do something similar to what we just

did, only we’d want to study a directed graph where we only ever have edges

resulting from flipping a 0 to a 1, and all numbers have an edge back to

0.

Back to thinking about counting

We’ve gotten side-tracked a bit. We started wanting to find a more efficient binary number system for incrementing, but is that what we got?

Well, no. In particular we have no algorithm for incrementing an arbitrary

number in our sequence. For instance, given only the number 010 we have

no way (that I’ve been able to figure out) of knowing that the left-most bit

should be flipped. In other words we need some amount of state to determine

the next bit to be flipped. At least that’s my assumtion.

Comparison

One thing we do have is a method for comparing numbers in the sequence, something that’s pretty much essential if you want to do anything with a counter.

Here is some code to do just that, defined here in terms of bit strings (as

output by prettyListBits); I haven’t figure out a clever set of bitwise ops

to do this, and have a somewhat foggy idea of why this works:

compareNums :: String -> String -> Ordering

compareNums [] [] = EQ

compareNums ('0':as) ('0':bs) = compareNums as bs

compareNums ('1':as) ('1':bs) = invert $ compareNums as bs

where invert EQ = EQ

invert GT = LT

invert LT = GT

compareNums (a:_) (b:_) = compare a b

compareNums _ _ = error "this assumes 0-padded, aligned numbers"

Counting, amortized increment cost, state

So how much does it really cost on average to increment an arbitrary number in the usual binary number system? We know it’s more than one, but we need a function from bit length to average cost.

To do that efficiently we’ll once again use the pattern we discovered above when we sketched out a 3-bit example. Looking at the way the different costs break down we have:

4 operations at cost 1

2 operations at cost 2

1 operation at cost 3

…out of a total of 8 - 1 increment operations, so the average cost is:

(4*1 + 2*2 + 1*3) / (8 - 1) = 1.5714

So a general function for b bits would look like:

amortizedIncrCost :: Integer -> Double

amortizedIncrCost b = avgd $ sum $ zipWith (*) (iterate (2*) 1) [b,b-1 ..1]

where avgd n = (fromIntegral n) / (2^b - 1)

We’d like to know how this behaves as our number of bits b approaches

infinity. We could take the limit of the function, but who knows how to do

that, so let’s just experiment:

*Main> amortizedIncrCost 3

1.5714285714285714

*Main> amortizedIncrCost 4

1.7333333333333334

*Main> amortizedIncrCost 8

1.968627450980392

*Main> amortizedIncrCost 16

1.9997558556496529

*Main> amortizedIncrCost 32

1.9999999925494194

*Main> amortizedIncrCost 64

2.0

Seems quite clearly to approach 2 as we exceed the accuracy of our Double,

i.e. the average cost to increment an arbitrary-size binary counter is

two bit flips. Interesting!

Remember above how we mentioned our counting system required some additional state to determine the next bit to flip? Well a single bit is the smallest unit of state we know about; therefore even if we could figure out how to determine bit-flips with a single bit of state, or could derive a way of calculating the offset from the number itself, we’d still never be able to do better than two bit-flips per increment.

This is probably all pretty obvious information theory stuff, but was a fun little diversion.